Focus

IT Strategy

We support you in implementing your corporate strategy, from the current state to the target state. Our approach is derived from the requirements of your business goals, your vision, and your IT constraints.

IT Tactics

Tactical measures, together with IT operations and IT strategy, form the levels of IT management. Our service portfolio ranges from introducing KPI-based reporting to disaster recovery processes.

IT Security

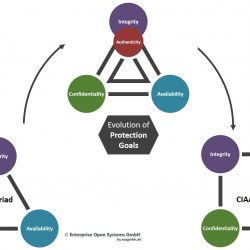

Advanced Persistent Threats (APTs) are complex, industrialized projects with sponsors, a project team, a budget, a schedule, and a plan. Information security is therefore no accident either. It, too, is a complex project with sponsors, a project team, a budget, a schedule, and a plan.

Excellence in execution

Information Security Management

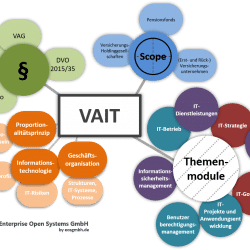

Enterprise Open Systems (EOS) is a highly specialized boutique IT consultancy whose core competence is Information Security Management.

In this sensitive context, we make important contributions to our partners in shaping their IT strategy and tactics, so that they reach their security goals reliably.

We work across industries and have particularly strong experience in financial services, insurance, pharmaceuticals, chemicals, and cloud providers.

Our staff have decades of expertise in the various aspects of IT security and are recognized specialists holding a range of certifications.

Added value for your company

Enterprise Open Systems supports its customers with know-how, experience, and passion.

Governance

Risks